LangChain Series (8): LangGraph Quickstart

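This article uses the Quickstart on the official LangGraph site as a guide. I modified it according to my own ideas, explain the relevant concepts in detail, and implement the whole workflow.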


I. Introduction

Environment: OpenEuler, Windows 11, WSL 2, Python 3.12.3, langchain 0.3, langgraph 0.2

Background: a busy development phase has just ended, and I want to consolidate what I learned by working through LangGraph once more.

Date: 2025-02-28

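Note: this is a technical review that implements the LangGraph Quickstart tutorial. It is based on the official documentation, but the usage does not follow it exactly; the official docs serve only as a template for the code.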

Official documentation: LangGraph Quickstart

II. Implementation

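The Quickstart covers a basic chatbot, tool calling, memory, human-in-the-loop (interrupts), custom state, and time travel. Each is implemented and explained below with the resources I have available. Code explained in an earlier section is not described again; it is only annotated with comments.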

1. Basic chat

The chatbot reuses the code from the first LangGraph article in this series.

(1) Code

```python
import os

from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import MessagesState

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph
graph_builder = StateGraph(MessagesState)


# Define the node: call the LLM on the conversation and return only the new reply
def chatbot(state: MessagesState):
    return {"messages": [llm.invoke(state["messages"])]}


# Add the node to the graph
graph_builder.add_node("chatbot", chatbot)
# Entry edge
graph_builder.add_edge(START, "chatbot")
# Exit edge
graph_builder.add_edge("chatbot", END)

graph = graph_builder.compile()


# Stream the graph; each event maps a node name to that node's update
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

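What the code does: it defines a basic chat graph that can chat indefinitely; enter q, quit or exit to leave. Because the graph is compiled without a checkpointer, every call to graph.stream starts from a fresh state that contains only the current message.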
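Why does chatbot return only the new reply wrapped in a list, and why does stream_graph_updates loop over event.values()? Below is a minimal plain-Python sketch, assuming two simplifications: the messages key of MessagesState appends whatever a node returns (the real reducer, add_messages, also handles message ids and format conversion), and the default stream mode yields one {node_name: update} event per node. fake_llm and stream_updates are hypothetical names; no LangGraph code is involved.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Message = Dict[str, str]


def fake_llm(messages: List[Message]) -> Message:
    """Hypothetical stand-in for llm.invoke: echo the last user message."""
    return {"role": "assistant", "content": f"echo: {messages[-1]['content']}"}


def chatbot(state: dict) -> dict:
    # Same shape as the article's node: only the NEW message, wrapped in a list.
    return {"messages": [fake_llm(state["messages"])]}


def append_messages(old: List[Message], new: List[Message]) -> List[Message]:
    # Simplified model of the append behaviour of the "messages" key.
    return old + new


def stream_updates(nodes: List[Tuple[str, Callable[[dict], dict]]], state: dict) -> Iterator[dict]:
    """Run the nodes in order and yield one {node_name: update} event per node."""
    for name, node in nodes:
        update = node(state)
        state["messages"] = append_messages(state["messages"], update["messages"])
        yield {name: update}


state = {"messages": [{"role": "user", "content": "hello"}]}
events = list(stream_updates([("chatbot", chatbot)], state))
for event in events:
    for value in event.values():
        print("Assistant:", value["messages"][-1]["content"])  # Assistant: echo: hello
```

Because the state keeps the whole list, the node never copies the history itself; it only reports what it adds.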

(2) Output

User: hello

Assistant: Hello! How can I assist you today?

User: q

Goodbye!

2. Adding tools

Here we bring in the tools implemented earlier with LangChain and look at how tools are used in LangGraph.

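As is well known, what a model can answer is determined by the data it was trained on. It certainly does not know today's weather, so we add a custom weather tool to extend the chatbot.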

(1) Code

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph
graph_builder = StateGraph(MessagesState)


# Define the tools
@tool
def get_weather(location: str):
    """Get the current weather for a city."""
    if location in ["上海", "北京"]:  # Shanghai, Beijing
        return f"当前{location}天气晴朗,温度为21℃"  # "Currently sunny in {location}, 21℃"
    else:
        return "该城市未知,不在地球上"  # "Unknown city, not on Earth"


@tool
def get_coolest_cities():
    """Get the coldest city in China."""
    return "黑龙江漠河气温在-30℃"  # "Mohe, Heilongjiang is at -30℃"


tools = [get_weather, get_coolest_cities]
# Wrap the tools as a graph node
tool_node = ToolNode(tools)
# Tell the model which tools it may call
llm_with_tools = llm.bind_tools(tools)


# Chatbot node
def chatbot(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


# Routing: run the tools if the model requested any, otherwise finish
def should_continue(state: MessagesState):
    messages = state["messages"]
    last_message = messages[-1]
    if last_message.tool_calls:
        return "tools"
    return END


# Add the nodes
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
# Entry edge
graph_builder.add_edge(START, "chatbot")
# Conditional edge out of chatbot
graph_builder.add_conditional_edges("chatbot", should_continue, ["tools", END])
# After the tools run, go back to chatbot
graph_builder.add_edge("tools", "chatbot")

graph = graph_builder.compile()

# Save the graph diagram as a PNG (rendered via the mermaid.ink web service by default)
png_bytes = graph.get_graph().draw_mermaid_png()
with open("chatbot.png", "wb") as f:
    f.write(png_bytes)


# Stream the graph; each event maps a node name to that node's update
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

(2) Output

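User: 你好 (Hello)

A: 你好!有什么可以帮您的吗?如果您有任何问题或需要帮助,欢迎告诉我。 (Hello! How can I help you? If you have any questions or need help, just let me know.)

User: 北京的天气怎么样 (What's the weather like in Beijing?)

A:

A: 当前北京天气晴朗,温度为21℃ (It is currently sunny in Beijing, 21℃)

A: 当前北京天气晴朗,温度为21℃。 如果您需要更多详细信息,请告诉我。 (It is currently sunny in Beijing, 21℃. Let me know if you need more details.)

User: 台湾的天气怎么样 (What's the weather like in Taiwan?)

A:

A: 该城市未知,不在地球上 (Unknown city, not on Earth)

A: 看起来在尝试获取台湾的天气时遇到了一些问题,可能是因为输入的位置信息不明确。如果您能提供更详细的城市名称,我将很高兴再次尝试。 (It seems there was a problem getting the weather for Taiwan, possibly because the location was unclear. If you can give a more specific city name, I'll be happy to try again.)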

User: q

Goodbye!

(3) Analysis

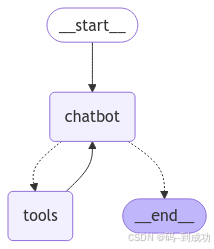

工具节点:工具节点使用与LangChain类似,仅使用LangGraph提供的ToolNode将其封装为node,然后添加节点,并添加条件边,以便于能够走到该节点。其流程图如下:
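As a rough mental model of what the tool node does with the model's reply, here is a plain-Python sketch (not ToolNode's real implementation): it looks up each requested tool by name, calls it with the arguments the model produced, and returns one tool message per call, tagged with the call id so the model can match results to requests. The dict message format, the TOOLS registry and the English tool strings are assumptions made for illustration.

```python
from typing import Callable, Dict, List


# Simplified English stand-ins for the article's get_weather / get_coolest_cities tools.
def get_weather(location: str) -> str:
    if location in ["Shanghai", "Beijing"]:
        return f"Sunny in {location}, 21℃"
    return "Unknown city, not on Earth"


def get_coolest_cities() -> str:
    return "Mohe, Heilongjiang: -30℃"


TOOLS: Dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "get_coolest_cities": get_coolest_cities,
}


def toy_tool_node(state: dict) -> dict:
    """Run every tool call in the last AI message; return one tool message per call."""
    last_message = state["messages"][-1]
    results: List[dict] = []
    for call in last_message.get("tool_calls", []):
        output = TOOLS[call["name"]](**call["args"])
        results.append({"role": "tool", "content": output, "tool_call_id": call["id"]})
    return {"messages": results}


ai_message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [
        {"name": "get_weather", "args": {"location": "Beijing"}, "id": "call_1"},
        {"name": "get_coolest_cities", "args": {}, "id": "call_2"},
    ],
}
for msg in toy_tool_node({"messages": [ai_message]})["messages"]:
    print(msg["tool_call_id"], msg["content"])
# call_1 Sunny in Beijing, 21℃
# call_2 Mohe, Heilongjiang: -30℃
```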

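The conditional edge:

```python
def should_continue(state: MessagesState):
    messages = state["messages"]
    last_message = messages[-1]
    # Route to the tool node if the model requested at least one tool call
    if last_message.tool_calls:
        return "tools"
    return END
```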

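This function implements the routing decision: if the tool_calls list of the last message is non-empty, tool_node runs next; otherwise the graph ends. It also explains the output above: for a tool question, stream_graph_updates prints three Assistant lines, namely the chatbot's tool-call message (whose content is empty), the tool result from the tools node, and the chatbot's final answer.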
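To make the loop concrete without calling a real model, the sketch below walks the same graph shape (chatbot, a conditional edge decided by should_continue, and a fixed edge from tools back to chatbot) using plain dicts and a scripted fake model. It prints one line per node update, reproducing the three Assistant lines seen above. fake_chatbot, fake_tools and run are hypothetical helpers, and the END value simply mirrors the string LangGraph uses for its END constant.

```python
END = "__end__"  # mirrors the value of langgraph's END constant


def should_continue(state: dict) -> str:
    # Same decision as the article's router, on plain dict messages.
    last_message = state["messages"][-1]
    return "tools" if last_message.get("tool_calls") else END


def fake_chatbot(state: dict) -> dict:
    """Hypothetical LLM: request the weather tool once, then answer from its result."""
    last = state["messages"][-1]
    if last["role"] == "user":
        call = {"name": "get_weather", "args": {"location": "Beijing"}, "id": "call_1"}
        return {"messages": [{"role": "assistant", "content": "", "tool_calls": [call]}]}
    return {"messages": [{"role": "assistant", "content": f"Tool says: {last['content']}"}]}


def fake_tools(state: dict) -> dict:
    call = state["messages"][-1]["tool_calls"][0]
    result = f"Sunny in {call['args']['location']}, 21℃"
    return {"messages": [{"role": "tool", "content": result, "tool_call_id": call["id"]}]}


def run(state: dict) -> list:
    """Walk START -> chatbot -> (tools -> chatbot)* -> END, printing like stream_graph_updates."""
    nodes = {"chatbot": fake_chatbot, "tools": fake_tools}
    visited, current = [], "chatbot"
    while current != END:
        visited.append(current)
        update = nodes[current](state)
        state["messages"] = state["messages"] + update["messages"]
        print("Assistant:", update["messages"][-1]["content"])
        # chatbot has the conditional edge; tools always goes back to chatbot
        current = should_continue(state) if current == "chatbot" else "chatbot"
    return visited


state = {"messages": [{"role": "user", "content": "What's the weather in Beijing?"}]}
print(run(state))
# Assistant:
# Assistant: Sunny in Beijing, 21℃
# Assistant: Tool says: Sunny in Beijing, 21℃
# ['chatbot', 'tools', 'chatbot']
```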

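For tools, LangGraph also provides a ready-made tools_condition function that implements this conditional edge directly. There is no need for should_continue: import tools_condition and pass it where the conditional edge is added: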

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph
graph_builder = StateGraph(MessagesState)


# Define the tools
@tool
def get_weather(location: str):
    """Get the current weather for a city."""
    if location in ["上海", "北京"]:  # Shanghai, Beijing
        return f"当前{location}天气晴朗,温度为21℃"  # "Currently sunny in {location}, 21℃"
    else:
        return "该城市未知,不在地球上"  # "Unknown city, not on Earth"


@tool
def get_coolest_cities():
    """Get the coldest city in China."""
    return "黑龙江漠河气温在-30℃"  # "Mohe, Heilongjiang is at -30℃"


tools = [get_weather, get_coolest_cities]
tool_node = ToolNode(tools)
llm_with_tools = llm.bind_tools(tools)


# Chatbot node
def chatbot(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


# Add the nodes
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
# Entry edge
graph_builder.add_edge(START, "chatbot")
# Conditional edge: tools_condition replaces should_continue
graph_builder.add_conditional_edges("chatbot", tools_condition, ["tools", END])
# After the tools run, go back to chatbot
graph_builder.add_edge("tools", "chatbot")

graph = graph_builder.compile()

# Save the graph diagram as a PNG
png_bytes = graph.get_graph().draw_mermaid_png()
with open("chatbot.png", "wb") as f:
    f.write(png_bytes)


# Stream the graph
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

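Note: with this approach the tool node must be named tools, because tools_condition routes to the fixed name "tools":

```python
graph_builder.add_node("tools", tool_node)
```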
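Why the name matters can be shown with a plain-Python model of how a router's label becomes a destination node. toy_tools_condition and resolve are hypothetical names, not LangGraph APIs. In the real API, I believe the escape hatch is passing a dict as the third argument, for example add_conditional_edges("chatbot", tools_condition, {"tools": "my_tools", END: END}); treat that as something to verify against your installed version.

```python
END = "__end__"  # mirrors langgraph's END constant


def toy_tools_condition(state: dict) -> str:
    # Like tools_condition, the label it returns is the fixed string "tools" (or END).
    return "tools" if state["messages"][-1].get("tool_calls") else END


def resolve(label: str, path_map: dict, nodes: set) -> str:
    """Map a router label to a destination node; fail if that node does not exist."""
    target = path_map.get(label, label)
    if target != END and target not in nodes:
        raise ValueError(f"router returned {label!r}, but there is no node named {target!r}")
    return target


state = {"messages": [{"role": "assistant", "content": "", "tool_calls": [{"id": "call_1"}]}]}
label = toy_tools_condition(state)

print(resolve(label, {}, {"chatbot", "tools"}))                        # tools
print(resolve(label, {"tools": "my_tools"}, {"chatbot", "my_tools"}))  # my_tools
try:
    resolve(label, {}, {"chatbot", "my_tools"})
except ValueError as err:
    print(err)  # router returned 'tools', but there is no node named 'tools'
```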

3. Adding memory

(1) Memory comparison

First, check the memory of the current application. Test output:

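User: 我的名字叫张三 (My name is Zhang San)

A: 很高兴认识你,张三。你可以问我任何问题,我会尽力帮助你。 (Nice to meet you, Zhang San. You can ask me anything and I'll do my best to help.)

User: 我的名字? (My name?)

A: 您还没有告诉我您的名字。您可以告诉我,以便我能够更好地与您交流。例如,您可以说:“我的名字是李华。” (You haven't told me your name yet. You can tell me so that I can communicate with you better, for example: "My name is Li Hua.")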

After adding memory, the output looks like this:

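User: 我的名字叫张三 (My name is Zhang San)

A: 很高兴认识你,张三!如果你有任何问题或者需要帮助的地方,无论是关于北京的信息、生活建议还是其他任何话题,都可以告诉我。我会尽力帮助你。有什么具体的事情是你现在想了解的吗? (Nice to meet you, Zhang San! If you have any questions or need help, whether about Beijing, life advice or any other topic, just tell me and I'll do my best to help. Is there anything specific you'd like to know right now?)

User: 我的名字? (My name?)

A: 你刚刚说你的名字叫张三。如果你有任何问题或者需要帮助,请随时告诉我。无论是生活上的建议、信息查询还是其他任何事情,我都在这里帮助你。有什么特别的事情是你想要了解或讨论的吗? (You just said your name is Zhang San. If you have any questions or need help, feel free to tell me. Whether it's life advice, looking something up or anything else, I'm here to help. Is there anything in particular you'd like to know or discuss?)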

(2) Code

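To add memory, create a config that holds the id of a conversation thread (thread_id), create a memory component, and pass it to compile() as the checkpointer. The changes are: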

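```python
from langgraph.checkpoint.memory import MemorySaver

# Create the in-memory checkpointer
memory = MemorySaver()
# Per-conversation config; thread_id identifies the conversation
config = {"configurable": {"thread_id": "1"}}
# Compile the graph with memory as its checkpointer
graph = graph_builder.compile(checkpointer=memory)
```

Full code: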

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.checkpoint.memory import MemorySaver

# Create the in-memory checkpointer
memory = MemorySaver()

# Per-conversation config; thread_id identifies the conversation
config = {"configurable": {"thread_id": "1"}}

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph
graph_builder = StateGraph(MessagesState)


# Define the tools
@tool
def get_weather(location: str):
    """Get the current weather for a city."""
    if location in ["上海", "北京"]:  # Shanghai, Beijing
        return f"当前{location}天气晴朗,温度为21℃"  # "Currently sunny in {location}, 21℃"
    else:
        return "该城市未知,不在地球上"  # "Unknown city, not on Earth"


@tool
def get_coolest_cities():
    """Get the coldest city in China."""
    return "黑龙江漠河气温在-30℃"  # "Mohe, Heilongjiang is at -30℃"


tools = [get_weather, get_coolest_cities]
tool_node = ToolNode(tools)
llm_with_tools = llm.bind_tools(tools)


# Chatbot node
def chatbot(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


# Add the nodes and edges
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_conditional_edges("chatbot", tools_condition, ["tools", END])
graph_builder.add_edge("tools", "chatbot")

# Compile the graph with memory as its checkpointer
graph = graph_builder.compile(checkpointer=memory)

# Save the graph diagram as a PNG
png_bytes = graph.get_graph().draw_mermaid_png()
with open("chatbot.png", "wb") as f:
    f.write(png_bytes)


# Stream the graph; config makes every turn read from and save to thread "1"
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}, config=config):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

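By setting the thread_id in config, conversations of different users or different sessions are kept apart: each thread_id has its own saved history.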
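To see why the thread_id separates conversations, here is a plain-Python model of a checkpointer that keeps one history per thread_id, read from the same config shape the article uses. ToyThreadMemory and chat are hypothetical; the scripted "model" only looks for a name the user introduced earlier in the same thread, which is enough to reproduce the before/after difference shown above.

```python
from collections import defaultdict
from typing import Dict, List

Message = Dict[str, str]


class ToyThreadMemory:
    """Hypothetical checkpointer model: one message history per thread_id."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[Message]] = defaultdict(list)

    def history(self, config: dict) -> List[Message]:
        # Same config shape as the article: {"configurable": {"thread_id": ...}}
        return self._threads[config["configurable"]["thread_id"]]


def chat(memory: ToyThreadMemory, config: dict, text: str) -> str:
    history = memory.history(config)
    history.append({"role": "user", "content": text})
    # Scripted "model": answer with the last name introduced in THIS thread only.
    prefix = "My name is "
    names = [m["content"][len(prefix):] for m in history
             if m["role"] == "user" and m["content"].startswith(prefix)]
    reply = f"You are {names[-1]}." if names else "You haven't told me your name."
    history.append({"role": "assistant", "content": reply})
    return reply


memory = ToyThreadMemory()
thread_1 = {"configurable": {"thread_id": "1"}}
thread_2 = {"configurable": {"thread_id": "2"}}

chat(memory, thread_1, "My name is Zhang San")
print(chat(memory, thread_1, "What is my name?"))  # You are Zhang San.
print(chat(memory, thread_2, "What is my name?"))  # You haven't told me your name.
```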

4. Human-in-the-loop

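This feature lets a human step into the loop and interact with the graph. It uses the interrupt function to pause the graph and a Command with resume to restart it; Command also accepts update and goto parameters, which are described in the source code.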

(1) Code

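```python
# Modules needed to interrupt and resume
from langgraph.types import Command, interrupt, Interrupt


# A tool that pauses the graph and waits for a human
@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human (interrupts the graph)."""
    human_response = interrupt({"query": query})
    return human_response["data"]


# Resume the interrupted graph with a (hard-coded) human response
def test_interrupt():
    human_response = (
        "We, the experts are here to help! We'd recommend you check out LangGraph to build your agent."
        " It's much more reliable and extensible than simple autonomous agents."
    )
    human_command = Command(resume={"data": human_response})
    events = graph.stream(human_command, config, stream_mode="values")
    for event in events:
        if "messages" in event:
            event["messages"][-1].pretty_print()


# In stream_graph_updates: if an event is an interrupt, resume the graph
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}, config=config):
        for value in event.values():
            if isinstance(value, tuple) and isinstance(value[0], Interrupt):
                try:
                    test_interrupt()
                except Exception as e:
                    print(e)
            else:
                print("Assistant:", value["messages"][-1].content)

# Question that triggers the interrupt:
# I need some expert guidance for building an AI agent. Could you request assistance for me?
```

What the code above does:

When the question above is asked, the model calls the human_assistance tool, which interrupts the graph. stream_graph_updates spots the interrupt among the returned events (as the check in the code assumes, it arrives as a tuple of Interrupt objects) and resumes the graph, so the while True loop can carry on.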
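What happens on resume can be surprising: LangGraph does not continue from the middle of the tool; it re-runs the interrupted node from the beginning, and this time interrupt() returns the value passed in Command(resume=...). The plain-Python sketch below models only that behaviour, using an exception to pause; ToyInterrupt and ToyRunner are hypothetical, not LangGraph APIs. The practical consequence is that any code placed before interrupt() runs twice.

```python
class ToyInterrupt(Exception):
    """Raised to pause a run; carries the payload shown to the human."""

    def __init__(self, payload):
        super().__init__(payload)
        self.payload = payload


class ToyRunner:
    """Hypothetical runner: on resume it re-executes the node from its first line."""

    def __init__(self, node):
        self.node = node
        self._resume_value = None
        self._resuming = False
        self._arg = None

    def interrupt(self, payload):
        # First pass: pause. After resume(): hand back the human's answer instead.
        if self._resuming:
            return self._resume_value
        raise ToyInterrupt(payload)

    def start(self, arg):
        self._arg = arg
        try:
            return ("done", self.node(self, arg))
        except ToyInterrupt as exc:
            return ("interrupted", exc.payload)

    def resume(self, value):
        self._resume_value, self._resuming = value, True
        return ("done", self.node(self, self._arg))  # node runs again from the top


calls = []  # records how often the tool body starts executing


def human_assistance(runner, query):
    calls.append(query)  # code before interrupt() runs on the first pass AND on resume
    human_response = runner.interrupt({"query": query})
    return human_response["data"]


runner = ToyRunner(human_assistance)
print(runner.start("I need expert guidance"))
# ('interrupted', {'query': 'I need expert guidance'})
print(runner.resume({"data": "Check out LangGraph."}))
# ('done', 'Check out LangGraph.')
print(len(calls))  # 2
```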

The full code:

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.checkpoint.memory import MemorySaver
from langgraph.types import Command, interrupt, Interrupt

# Create the in-memory checkpointer
memory = MemorySaver()

# Per-conversation config; thread_id identifies the conversation
config = {"configurable": {"thread_id": "1"}}

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph
graph_builder = StateGraph(MessagesState)


# Define the tools
@tool
def get_weather(location: str):
    """Get the current weather for a city."""
    if location in ["上海", "北京"]:  # Shanghai, Beijing
        return f"当前{location}天气晴朗,温度为21℃"  # "Currently sunny in {location}, 21℃"
    else:
        return "该城市未知,不在地球上"  # "Unknown city, not on Earth"


@tool
def get_coolest_cities():
    """Get the coldest city in China."""
    return "黑龙江漠河气温在-30℃"  # "Mohe, Heilongjiang is at -30℃"


@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human (interrupts the graph)."""
    human_response = interrupt({"query": query})
    return human_response["data"]


tools = [get_weather, get_coolest_cities, human_assistance]
tool_node = ToolNode(tools)
llm_with_tools = llm.bind_tools(tools)


# Chatbot node
def chatbot(state: MessagesState):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


# Add the nodes and edges
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_conditional_edges("chatbot", tools_condition, ["tools", END])
graph_builder.add_edge("tools", "chatbot")

# Compile the graph with memory as its checkpointer
graph = graph_builder.compile(checkpointer=memory)

# Question that triggers the interrupt:
# I need some expert guidance for building an AI agent. Could you request assistance for me?


# Resume the interrupted graph with a (hard-coded) human response
def test_interrupt():
    human_response = (
        "We, the experts are here to help! We'd recommend you check out LangGraph to build your agent."
        " It's much more reliable and extensible than simple autonomous agents."
    )
    human_command = Command(resume={"data": human_response})
    events = graph.stream(human_command, config, stream_mode="values")
    for event in events:
        if "messages" in event:
            event["messages"][-1].pretty_print()


# Stream the graph; resume automatically when an interrupt shows up
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}, config=config):
        for value in event.values():
            if isinstance(value, tuple) and isinstance(value[0], Interrupt):
                try:
                    test_interrupt()
                except Exception as e:
                    print(e)
            else:
                print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

(2) Output

User: I need some expert guidance for building an AI agent. Could you request assistance for me?

Assistant:

================================== Ai Message ==================================

Tool Calls:

human_assistance (call_9f2f6a79-cfe1-4690-a603-bac6b12f186a)

Call ID: call_9f2f6a79-cfe1-4690-a603-bac6b12f186a

Args:

query: I need some expert guidance for building an AI agent.

================================= Tool Message =================================

Name: human_assistance

We, the experts are here to help! We'd recommend you check out LangGraph to build your agent. It's much more reliable and extensible than simple autonomous agents.

================================== Ai Message ==================================

We have an expert response for you! They recommend checking out LangGraph for building your AI agent. LangGraph is touted as being much more reliable and extensible compared to simpler autonomous agents.

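User:

With the approach above, human-in-the-loop interaction works.

Note: the key to triggering the interrupt is the question itself. What matters most is phrasing a question that makes the model call the human_assistance tool.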

5. Custom state

Here, the state is the container for the information that flows between nodes, so this part explains how to add to, update, and read the state.
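A custom state such as State(MessagesState) with name and birthday can be pictured as a set of keys, each with a merge rule: messages is appended to, while keys declared without a reducer (name, birthday) are simply overwritten by whatever a node or a Command(update=...) returns. The sketch below models that with plain dicts; REDUCERS and apply_update are hypothetical names, and LangGraph's real add_messages reducer does more (for example, replacing a message that shares an id).

```python
from typing import Any, Callable, Dict


def append(old: list, new: list) -> list:
    return old + new


def overwrite(old: Any, new: Any) -> Any:
    return new


# Hypothetical reducer table mirroring class State(MessagesState): name: str, birthday: str
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "messages": append,
    "name": overwrite,
    "birthday": overwrite,
}


def apply_update(state: dict, update: dict) -> dict:
    """Merge an update key by key; keys missing from the update keep their old value."""
    merged = dict(state)
    for key, value in update.items():
        merged[key] = REDUCERS[key](merged[key], value)
    return merged


state = {
    "messages": [{"role": "user", "content": "When was LangGraph released?"}],
    "name": "",
    "birthday": "",
}
# Same shape as the update the article's human_assistance tool returns via Command(update=...)
update = {
    "name": "LangGraph",
    "birthday": "Jan 17, 2024",
    "messages": [{"role": "tool", "content": "Made a correction", "tool_call_id": "call_1"}],
}
state = apply_update(state, update)
print(state["name"], state["birthday"], len(state["messages"]))  # LangGraph Jan 17, 2024 2
```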

(1) Updating state automatically

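The goal: a question makes the model call a tool that looks up a date, the chatbot then checks whether that answer is correct and outputs the result, and the result is saved into state at the same time, as shown in the figure below.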

Create a tool that returns LangGraph's release date and add it to tools:

```python
@tool
def get_langgraph_birthday():
    """Get LangGraph's release date."""
    return {"birthday": "2023-01-01"}
```

Modify the human_assistance tool so that it also updates state:

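```python
from typing import Annotated

from langchain_core.messages import ToolMessage
from langchain_core.tools import tool, InjectedToolCallId
from langgraph.types import Command, interrupt


@tool
def human_assistance(name: str, birthday: str, tool_call_id: Annotated[str, InjectedToolCallId]) -> Command:
    """Ask a human to review name and birthday (interrupts the graph) and update state."""
    human_response = interrupt(
        {
            "question": "Is this correct?",
            "name": name,
            "birthday": birthday,
        },
    )
    # If the information is correct, update the state as-is.
    if human_response.get("correct", "").lower().startswith("y"):
        verified_name = name
        verified_birthday = birthday
        response = "Correct"
    # Otherwise, take the information from the human's input.
    else:
        verified_name = human_response.get("name", name)
        verified_birthday = human_response.get("birthday", birthday)
        response = f"Made a correction: {human_response}"

    # This time, update the state explicitly from inside the tool, including a ToolMessage.
    state_update = {
        "name": verified_name,
        "birthday": verified_birthday,
        "messages": [ToolMessage(response, tool_call_id=tool_call_id)],
    }
    # Return a Command object from the tool to update the state.
    return Command(update=state_update)
```

Resume the interrupted graph, supplying the correct date: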

```python
def test_interrupt():
    # No "correct" key is sent, so the tool takes the correction branch
    human_command = Command(
        resume={
            "name": "LangGraph",
            "birthday": "Jan 17, 2024",
        },
    )
    events = graph.stream(human_command, config, stream_mode="values")
    for event in events:
        if "messages" in event:
            event["messages"][-1].pretty_print()
```

Full code:

```python
import os
from typing import Annotated

from langchain_openai import ChatOpenAI
from langchain_core.messages import ToolMessage
from langchain_core.tools import tool, InjectedToolCallId
from langgraph.graph import StateGraph, START, END, MessagesState
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.checkpoint.memory import MemorySaver
from langgraph.types import Command, interrupt, Interrupt

# Create the in-memory checkpointer
memory = MemorySaver()


# Custom state: the message list plus name and birthday
class State(MessagesState):
    name: str
    birthday: str


# Per-conversation config; thread_id identifies the conversation
config = {"configurable": {"thread_id": "1"}}

# Model endpoint, API key and model name (values redacted)
llm = ChatOpenAI(
    base_url="https://lxxxxx.enovo.com/v1/",
    api_key="sxxxxxxxwW",
    model_name="qwen2.5-instruct",
)

# LangSmith tracing settings and API key
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_API_KEY"] = "lsv2_pt_1dee022xxxxxxxxxx05954"
os.environ["LANGCHAIN_PROJECT"] = "chatbot_test"

# Define the graph on the custom state
graph_builder = StateGraph(State)


# Define the tools
@tool
def get_weather(location: str):
    """Get the current weather for a city."""
    if location in ["上海", "北京"]:  # Shanghai, Beijing
        return f"当前{location}天气晴朗,温度为21℃"  # "Currently sunny in {location}, 21℃"
    else:
        return "该城市未知,不在地球上"  # "Unknown city, not on Earth"


@tool
def get_coolest_cities():
    """Get the coldest city in China."""
    return "黑龙江漠河气温在-30℃"  # "Mohe, Heilongjiang is at -30℃"


@tool
def get_langgraph_birthday():
    """Get LangGraph's release date."""
    return {"birthday": "2023-01-01"}


@tool
def human_assistance(name: str, birthday: str, tool_call_id: Annotated[str, InjectedToolCallId]) -> Command:
    """Ask a human to review name and birthday (interrupts the graph) and update state."""
    human_response = interrupt({"question": "Is this correct?", "name": name, "birthday": birthday})
    # If the information is correct, update the state as-is; otherwise use the human's input
    if human_response.get("correct", "").lower().startswith("y"):
        verified_name, verified_birthday, response = name, birthday, "Correct"
    else:
        verified_name = human_response.get("name", name)
        verified_birthday = human_response.get("birthday", birthday)
        response = f"Made a correction: {human_response}"
    # Update state explicitly from inside the tool, including a ToolMessage
    state_update = {
        "name": verified_name,
        "birthday": verified_birthday,
        "messages": [ToolMessage(response, tool_call_id=tool_call_id)],
    }
    return Command(update=state_update)


tools = [get_weather, get_coolest_cities, human_assistance, get_langgraph_birthday]
tool_node = ToolNode(tools)
llm_with_tools = llm.bind_tools(tools)


# Chatbot node
def chatbot(state: State):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


# Add the nodes and edges
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_conditional_edges("chatbot", tools_condition, ["tools", END])
graph_builder.add_edge("tools", "chatbot")

# Compile the graph with memory as its checkpointer
graph = graph_builder.compile(checkpointer=memory)


# Resume the interrupted graph with corrected data ("correct" is not sent)
def test_interrupt():
    human_command = Command(resume={"name": "LangGraph", "birthday": "Jan 17, 2024"})
    events = graph.stream(human_command, config, stream_mode="values")
    for event in events:
        if "messages" in event:
            event["messages"][-1].pretty_print()


# Stream the graph; resume automatically when an interrupt shows up
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}, config=config):
        for value in event.values():
            if isinstance(value, tuple) and isinstance(value[0], Interrupt):
                try:
                    test_interrupt()
                except Exception as e:
                    print(e)
            else:
                print("Assistant:", value["messages"][-1].content)


# Chat loop; type quit, exit or q to leave
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except EOFError:
        # Fallback if input() is not available
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

Output

User: Can you look up when LangGraph was released? When you have the answer, use the human_assistance tool for review.

Assistant:

================================== Ai Message ==================================

Tool Calls:

get_langgraph_birthday (call_788ea39a-fd9c-48c1-9369-4ccfe874db7c)

Call ID: call_788ea39a-fd9c-48c1-9369-4ccfe874db7c

Args:

human_assistance (call_788ea39a-fd9c-48c1-9369-4ccfe874db7c)

Call ID: call_788ea39a-fd9c-48c1-9369-4ccfe874db7c

Args:

name: LangGraph Release Date

birthday:

{'name': 'LangGraph', 'birthday': 'Jan 17, 2024'}

================================= Tool Message =================================

Name: human_assistance

Made a correction: {'name': 'LangGraph', 'birthday': 'Jan 17, 2024'}

================================== Ai Message ==================================

LangGraph was released on January 17, 2024. This information has been reviewed and confirmed.

(2) Updating state manually

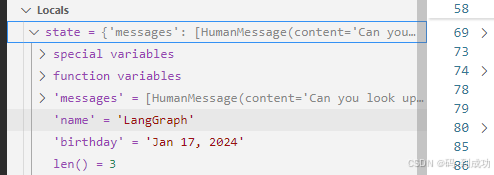

This part is illustrated with a debugging session (debug mode).

Use get_state to read the current name and birthday from state:

```python
# Read name and birthday from state
snapshot = graph.get_state(config)
print({k: v for k, v in snapshot.values.items() if k in ("name", "birthday")})
# Output:
# {'name': 'LangGraph', 'birthday': 'Jan 17, 2024'}
```

Update the information with update_state and check it:

```python
# Update name in state with update_state
graph.update_state(config, {"name": "LangGraph (library)"})
# Return value of the update:
# {'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1eff8c07-7f74-6ca4-8004-4791cc3c8205'}}

# Read name and birthday again
snapshot = graph.get_state(config)
print({k: v for k, v in snapshot.values.items() if k in ("name", "birthday")})
# Output:
# {'name': 'LangGraph (library)', 'birthday': 'Jan 17, 2024'}
```

The name stored in state has clearly changed.
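Notice that update_state returned a config with a new checkpoint_id. A plain-Python sketch of that behaviour, assuming (consistently with the output above) that an update is written as a new checkpoint instead of editing the old one, and that get_state reads the newest checkpoint. ToyCheckpointer is hypothetical and merges by simple overwrite, which only matches keys like name and birthday; real update_state passes values through the state's reducers, so a messages update would be appended.

```python
import uuid
from typing import Dict, List


class ToyCheckpointer:
    """Hypothetical model: an append-only list of snapshots for one thread."""

    def __init__(self, thread_id: str, initial: Dict[str, str]) -> None:
        self.thread_id = thread_id
        self.snapshots: List[dict] = [{"checkpoint_id": str(uuid.uuid4()), "values": dict(initial)}]

    def get_state(self) -> Dict[str, str]:
        # Like get_state(config) without a checkpoint_id: read the newest snapshot.
        return self.snapshots[-1]["values"]

    def update_state(self, values: Dict[str, str]) -> dict:
        # Write a NEW snapshot (simple overwrite merge); older snapshots stay untouched.
        merged = {**self.get_state(), **values}
        checkpoint_id = str(uuid.uuid4())
        self.snapshots.append({"checkpoint_id": checkpoint_id, "values": merged})
        return {"configurable": {"thread_id": self.thread_id, "checkpoint_id": checkpoint_id}}


cp = ToyCheckpointer("1", {"name": "LangGraph", "birthday": "Jan 17, 2024"})
new_config = cp.update_state({"name": "LangGraph (library)"})
print(cp.get_state())  # {'name': 'LangGraph (library)', 'birthday': 'Jan 17, 2024'}
print(cp.snapshots[0]["values"]["name"])  # LangGraph  (the pre-update snapshot survives)
```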

6. Time travel

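In a typical chatbot workflow, the user interacts with the bot one or more times to complete a task. In the previous sections we saw how memory and human-in-the-loop let us inspect the graph state and control future responses.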

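But what if you want to let users start from an earlier response and "branch off" to explore a different outcome? Or let users "rewind" the assistant's work to fix mistakes or try a different strategy (common in applications such as autonomous software engineers)?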

You can do this with LangGraph's built-in "time travel" feature.
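The "branching" idea can be pictured with a plain-Python sketch: every step appends a checkpoint that points at its parent, and continuing from an older checkpoint adds a new child instead of deleting the later ones. ToyHistory, Checkpoint and continue_from are hypothetical names; LangGraph's checkpointers store much more (config, metadata, pending writes), so this models only the shape of the history.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Checkpoint:
    id: int
    parent: Optional[int]
    messages: List[str]


@dataclass
class ToyHistory:
    """Hypothetical append-only checkpoint store; each checkpoint remembers its parent."""
    checkpoints: List[Checkpoint] = field(default_factory=list)

    def write(self, parent: Optional[int], messages: List[str]) -> int:
        cp = Checkpoint(len(self.checkpoints), parent, messages)
        self.checkpoints.append(cp)
        return cp.id

    def continue_from(self, checkpoint_id: int, new_message: str) -> int:
        # Branch: build on an earlier checkpoint; later checkpoints are not deleted.
        base = self.checkpoints[checkpoint_id]
        return self.write(base.id, base.messages + [new_message])


h = ToyHistory()
c0 = h.write(None, ["user: hello"])
c1 = h.write(c0, ["user: hello", "ai: Hello!"])
c2 = h.write(c1, ["user: hello", "ai: Hello!", "user: I am Jack"])
fork = h.continue_from(c1, "user: I am Rose")
print(h.checkpoints[fork].parent, h.checkpoints[fork].messages)
# 1 ['user: hello', 'ai: Hello!', 'user: I am Rose']
print(len(h.checkpoints))  # 4: the original branch (c2) is still there
```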

This part reuses the code from section 3 (not repeated here; see the code in "3. Adding memory").

(1) Conversation history

User: hello

Assistant: Hello! How can I assist you today?

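User: 我是jack (I'm Jack)

A: 很高兴认识你,Jack!有什么我可以帮助你的吗? (Nice to meet you, Jack! Is there anything I can help you with?)

User: 我是谁? (Who am I?)

A: 你是Jack!刚刚我们还聊到了你。有什么特别的事情是你想要讨论或是需要帮助的吗? (You're Jack! We were just talking about you. Is there anything in particular you'd like to discuss or need help with?)

User: 北京的天气怎么样 (What's the weather like in Beijing?)

(2) Listing all checkpoints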

A breakpoint is set here, and the debugger is used to demonstrate time travel.

Use get_state_history to retrieve every saved checkpoint of the conversation:

```python
to_replay = None
for state in graph.get_state_history(config):
    # Print the number of messages and the next node(s) of every checkpoint
    print("Num Messages: ", len(state.values["messages"]), "Next: ", state.next)
    print("-" * 80)
    if len(state.values["messages"]) == 6:
        to_replay = state
```

(3) Output

Num Messages: 7 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 6 Next: ('__start__',)

--------------------------------------------------------------------------------

Num Messages: 6 Next: ()

--------------------------------------------------------------------------------

Num Messages: 5 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 4 Next: ('__start__',)

--------------------------------------------------------------------------------

Num Messages: 4 Next: ()

--------------------------------------------------------------------------------

Num Messages: 3 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 2 Next: ('__start__',)

--------------------------------------------------------------------------------

Num Messages: 2 Next: ()

--------------------------------------------------------------------------------

Num Messages: 1 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 0 Next: ('__start__',)

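A checkpoint is saved at every step of the graph, so you can rewind through the entire history of the thread. We picked to_replay as the state to resume from: it is the state right after the chatbot node in the third graph invocation above (the "我是谁?" turn), which is why it holds six messages and has no next node.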
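Why does the loop end up with the checkpoint whose next is () even though two checkpoints hold six messages? The history is printed newest first, and the loop keeps reassigning to_replay, so the last match (the older one) wins. The sketch below replays that selection on the (message count, next) pairs copied from the printed history, using plain tuples instead of StateSnapshot objects, and adds a more explicit filter as an alternative.

```python
# (num_messages, next) pairs copied from the printed history above, newest first.
history = [
    (7, ("chatbot",)), (6, ("__start__",)), (6, ()), (5, ("chatbot",)),
    (4, ("__start__",)), (4, ()), (3, ("chatbot",)), (2, ("__start__",)),
    (2, ()), (1, ("chatbot",)), (0, ("__start__",)),
]

to_replay = None
for num_messages, next_nodes in history:
    if num_messages == 6:
        # Later (= older) matches overwrite earlier ones, so the last match wins.
        to_replay = (num_messages, next_nodes)

print(to_replay)  # (6, ())

# A filter that states the intent directly: six messages and nothing left to run.
explicit = next(item for item in history if item[0] == 6 and item[1] == ())
```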

(4) Inspecting the selected checkpoint

Inspect the checkpoint that holds six messages:

```python
print(to_replay.next)
print(to_replay.config)
```

(5) Output

()

{'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1eff8c3c-9e57-6794-8007-07c02ab35005'}}

(6) Replaying from the checkpoint

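```python
# Stream from the selected checkpoint (None = no new input) and print its latest message
for event in graph.stream(None, to_replay.config, stream_mode="values"):
    if "messages" in event:
        event["messages"][-1].pretty_print()
```

pretty_print output:

================================== Ai Message ==================================

你是Jack!刚刚我们还聊到了你。有什么特别的事情是你想要讨论或是需要帮助的吗? (You're Jack! We were just talking about you. Is there anything in particular you'd like to discuss or need help with?)

III. Summary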

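This article reproduces the features of the LangGraph Quickstart, but the code is not copied verbatim; parts of it were changed. The basic functionality is implemented but not explained in depth. It is meant only as a demo of how these LangGraph features are used; for detailed requirements, extend the code accordingly.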
