[Error Log] CrossEncoder fails to download a model from the Hugging Face Hub ( ConnectionError: (ProtocolError('Connection aborted )

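- I. Error Message
- II. Problem Analysis
    - 1. Check the local model cache
    - 2. Test the network with Python code
    - 3. Last resort - download the model manually
- III. Solution
    - 1. Network problem - clear the proxy
    - 2. Successful run after the fix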

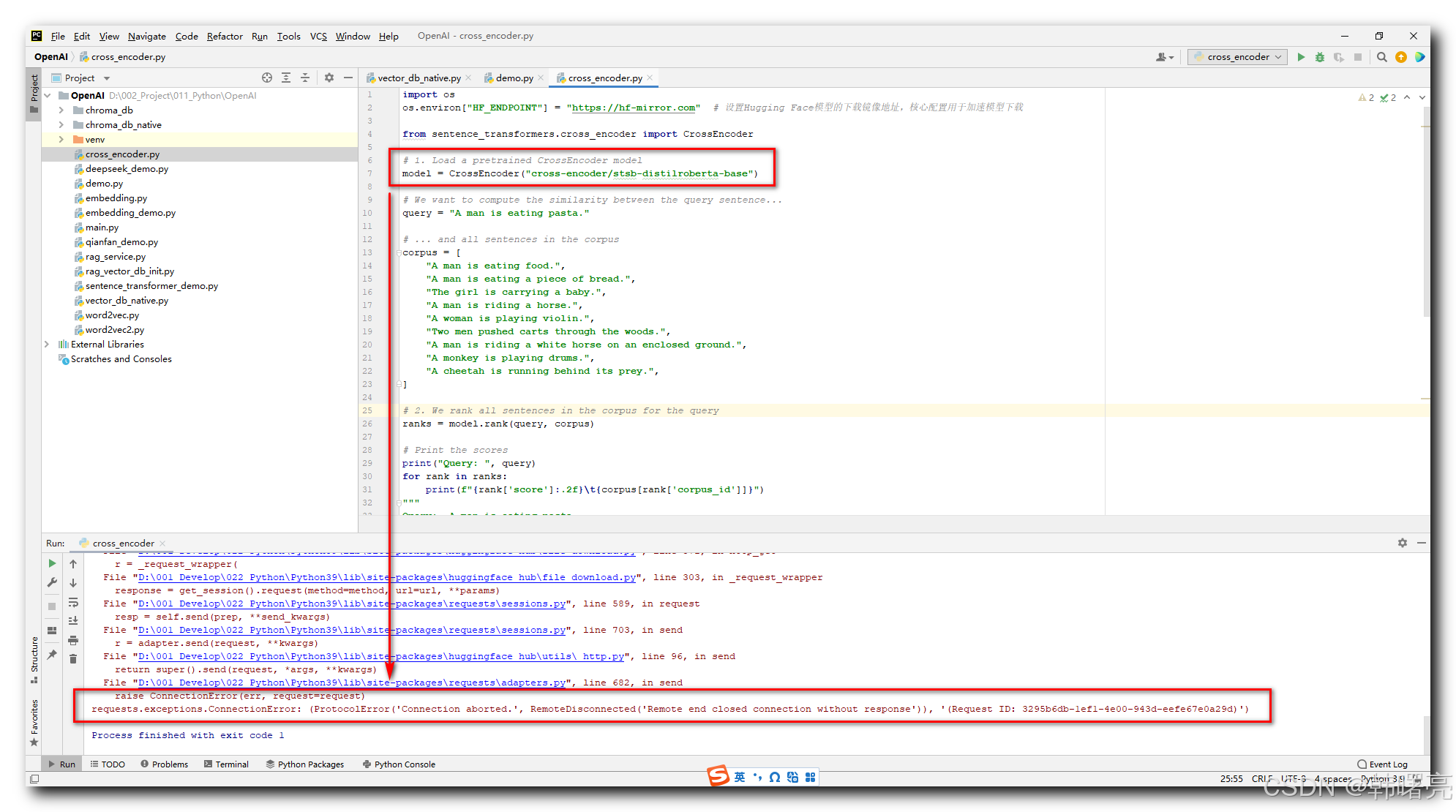

I. Error Message

Running the code from the https://www.sbert.net/docs/quickstart.html documentation:

    from sentence_transformers.cross_encoder import CrossEncoder

    # 1. Load a pretrained CrossEncoder model
    model = CrossEncoder("cross-encoder/stsb-distilroberta-base")

    # We want to compute the similarity between the query sentence...
    query = "A man is eating pasta."

    # ... and all sentences in the corpus
    corpus = [
        "A man is eating food.",
        "A man is eating a piece of bread.",
        "The girl is carrying a baby.",
        "A man is riding a horse.",
        "A woman is playing violin.",
        "Two men pushed carts through the woods.",
        "A man is riding a white horse on an enclosed ground.",
        "A monkey is playing drums.",
        "A cheetah is running behind its prey.",
    ]

    # 2. We rank all sentences in the corpus for the query
    ranks = model.rank(query, corpus)

    # Print the scores
    print("Query: ", query)
    for rank in ranks:
        print(f"{rank['score']:.2f}\t{corpus[rank['corpus_id']]}")
    """
    Query: A man is eating pasta.
    0.67 A man is eating food.
    0.34 A man is eating a piece of bread.
    0.08 A man is riding a horse.
    0.07 A man is riding a white horse on an enclosed ground.
    0.01 The girl is carrying a baby.
    0.01 Two men pushed carts through the woods.
    0.01 A monkey is playing drums.
    0.01 A woman is playing violin.
    0.01 A cheetah is running behind its prey.
    """

    # 3. Alternatively, you can also manually compute the score between two sentences
    import numpy as np

    sentence_combinations = [[query, sentence] for sentence in corpus]
    scores = model.predict(sentence_combinations)

    # Sort the scores in decreasing order to get the corpus indices
    ranked_indices = np.argsort(scores)[::-1]
    print("Scores:", scores)
    print("Indices:", ranked_indices)
    """
    Scores: [0.6732372, 0.34102544, 0.00542465, 0.07569341, 0.00525378, 0.00536814, 0.06676237, 0.00534825, 0.00516717]
    Indices: [0 1 3 6 2 5 7 4 8]
    """

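Next, configure a Hugging Face mirror that is reachable from mainland China by adding the following lines at the top of the script, before the sentence_transformers import:

    import os

    os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"  # Mirror endpoint for Hugging Face model downloads; the key setting for speeding up downloads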
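The position of these lines matters. As far as I know, `huggingface_hub` reads `HF_ENDPOINT` once, when the package is first imported, so the variable should be set before `sentence_transformers` is imported. A minimal sketch of a guard for this follows; `configure_hf_mirror` is a hypothetical helper name and not part of any library.

```python
import os
import sys


def configure_hf_mirror(endpoint: str = "https://hf-mirror.com") -> bool:
    """Point Hugging Face downloads at a mirror endpoint.

    Returns True if the variable was set before huggingface_hub was imported,
    and False if the package was already loaded (assumption: in that case the
    running process keeps using the endpoint it read at import time).
    """
    already_imported = "huggingface_hub" in sys.modules
    os.environ["HF_ENDPOINT"] = endpoint
    return not already_imported


# Call this at the very top of the script, before importing sentence_transformers.
took_effect = configure_hf_mirror()
if not took_effect:
    print("Warning: huggingface_hub was imported before HF_ENDPOINT was set")
```

If the warning is printed, moving the call above every Hugging Face related import is the first thing to try.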

The script still fails with the following error:

D:\001_Develop\022_Python\Python39\python.exe D:/002_Project/011_Python/OpenAI/cross_encoder.py

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 787, in urlopen

response = self._make_request(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 534, in _make_request

response = conn.getresponse()

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connection.py", line 516, in getresponse

httplib_response = super().getresponse()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 1371, in getresponse

response.begin()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 319, in begin

version, status, reason = self._read_status()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 288, in _read_status

raise RemoteDisconnected("Remote end closed connection without"

http.client.RemoteDisconnected: Remote end closed connection without response

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\adapters.py", line 667, in send

resp = conn.urlopen(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 841, in urlopen

retries = retries.increment(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\util\retry.py", line 474, in increment

raise reraise(type(error), error, _stacktrace)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\util\util.py", line 38, in reraise

raise value.with_traceback(tb)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 787, in urlopen

response = self._make_request(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connectionpool.py", line 534, in _make_request

response = conn.getresponse()

File "D:\001_Develop\022_Python\Python39\lib\site-packages\urllib3\connection.py", line 516, in getresponse

httplib_response = super().getresponse()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 1371, in getresponse

response.begin()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 319, in begin

version, status, reason = self._read_status()

File "D:\001_Develop\022_Python\Python39\lib\http\client.py", line 288, in _read_status

raise RemoteDisconnected("Remote end closed connection without"

urllib3.exceptions.ProtocolError: ('Connection aborted.', RemoteDisconnected('Remote end closed connection without response'))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "D:\002_Project\011_Python\OpenAI\cross_encoder.py", line 7, in <module>

model = CrossEncoder("cross-encoder/stsb-distilroberta-base")

File "D:\001_Develop\022_Python\Python39\lib\site-packages\sentence_transformers\cross_encoder\CrossEncoder.py", line 82, in __init__

self.config = AutoConfig.from_pretrained(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\models\auto\configuration_auto.py", line 1075, in from_pretrained

config_dict, unused_kwargs = PretrainedConfig.get_config_dict(pretrained_model_name_or_path, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\configuration_utils.py", line 594, in get_config_dict

config_dict, kwargs = cls._get_config_dict(pretrained_model_name_or_path, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\configuration_utils.py", line 653, in _get_config_dict

resolved_config_file = cached_file(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\transformers\utils\hub.py", line 342, in cached_file

resolved_file = hf_hub_download(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\utils\_validators.py", line 114, in _inner_fn

return fn(*args, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 862, in hf_hub_download

return _hf_hub_download_to_cache_dir(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 1011, in _hf_hub_download_to_cache_dir

_download_to_tmp_and_move(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 1547, in _download_to_tmp_and_move

http_get(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 371, in http_get

r = _request_wrapper(

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\file_download.py", line 303, in _request_wrapper

response = get_session().request(method=method, url=url, **params)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\sessions.py", line 589, in request

resp = self.send(prep, **send_kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\sessions.py", line 703, in send

r = adapter.send(request, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\huggingface_hub\utils\_http.py", line 96, in send

return super().send(request, *args, **kwargs)

File "D:\001_Develop\022_Python\Python39\lib\site-packages\requests\adapters.py", line 682, in send

raise ConnectionError(err, request=request)

requests.exceptions.ConnectionError: (ProtocolError('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')), '(Request ID: 3295b6db-1ef1-4e00-943d-eefe67e0a29d)')

Process finished with exit code 1

The core error message is:

requests.exceptions.ConnectionError: (ProtocolError('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')), '(Request ID: 3295b6db-1ef1-4e00-943d-eefe67e0a29d)')
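This message nests three exceptions: the `requests` `ConnectionError` wraps a urllib3 `ProtocolError`, which in turn wraps `RemoteDisconnected`. The innermost one usually says the most about what happened on the wire. The sketch below digs it out by walking exceptions found in `args`, `__cause__`, and `__context__`. `innermost_error` is a hypothetical helper, and the built-in exception types in the usage lines are stand-ins that only imitate the nesting seen in the traceback.

```python
def innermost_error(exc: BaseException) -> BaseException:
    """Return the deepest exception nested inside exc.

    Looks first for an exception object stored in exc.args (the layout used in
    the error above), then falls back to __cause__ and __context__.
    """
    seen = set()
    current = exc
    while id(current) not in seen:  # guard against reference cycles
        seen.add(id(current))
        nested = next((a for a in current.args if isinstance(a, BaseException)), None)
        if nested is None:
            nested = current.__cause__ or current.__context__
        if nested is None:
            break
        current = nested
    return current


# Stand-ins that mimic ConnectionError(ProtocolError(..., RemoteDisconnected(...)), ...)
inner = ConnectionResetError(10054, "connection reset")
middle = RuntimeError("Connection aborted.", inner)
outer = RuntimeError(middle, "(Request ID: ...)")
print(type(innermost_error(outer)).__name__)  # prints "ConnectionResetError"
```

In a real script the helper would be called inside an `except Exception as e:` block around the `CrossEncoder(...)` call.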


II. Problem Analysis

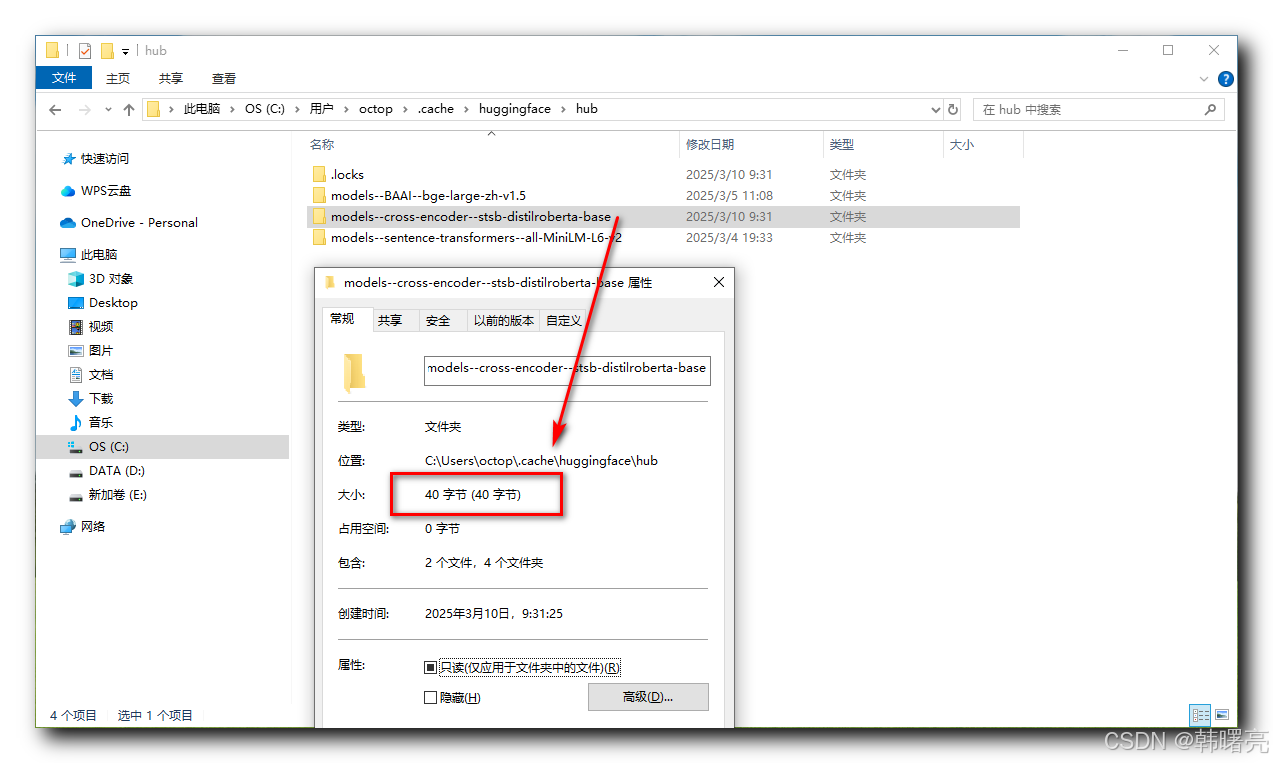

1. Check the local model cache

Run the following code:

    import os

    os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"  # Mirror endpoint for Hugging Face model downloads; the key setting for speeding up downloads

    from sentence_transformers.cross_encoder import CrossEncoder

    # 1. Load a pretrained CrossEncoder model
    model = CrossEncoder("cross-encoder/stsb-distilroberta-base")

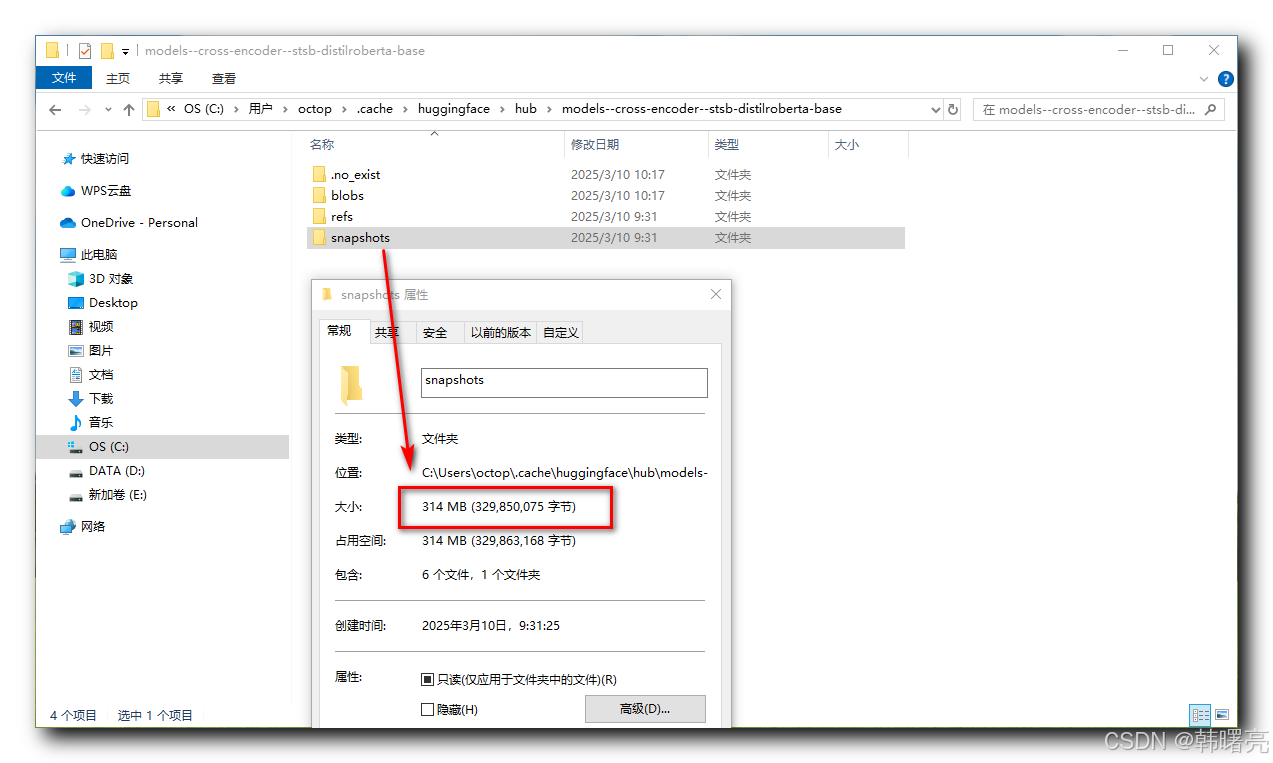

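This code downloads the "cross-encoder/stsb-distilroberta-base" model. Locally, the model is cached in the directory C:\Users\octop\.cache\huggingface\hub\models--cross-encoder--stsb-distilroberta-base .

The local cache may be corrupted. You can delete the damaged cache files by hand, or use the following code to force a fresh download that replaces them:

    from huggingface_hub import snapshot_download

    # Ignore the old cache and download everything again
    model_path = snapshot_download(
        "cross-encoder/stsb-distilroberta-base",
        force_download=True,    # Force a fresh download
        resume_download=False,  # Disable resuming a partial download (recent huggingface_hub releases deprecate this argument)
    )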
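For the manual route, the cache folder can also be located and removed from Python. This is a minimal sketch that assumes the default cache layout, `~/.cache/huggingface/hub/models--<org>--<name>`, optionally overridden by the `HF_HUB_CACHE` environment variable; it does not handle `HF_HOME`. `repo_cache_dir` and `remove_repo_cache` are hypothetical helper names.

```python
import os
import shutil
from pathlib import Path
from typing import Optional


def repo_cache_dir(repo_id: str, cache_root: Optional[Path] = None) -> Path:
    """Return the folder where the given model repository is cached."""
    if cache_root is None:
        default_root = Path.home() / ".cache" / "huggingface" / "hub"
        cache_root = Path(os.environ.get("HF_HUB_CACHE", default_root))
    return Path(cache_root) / ("models--" + repo_id.replace("/", "--"))


def remove_repo_cache(repo_id: str, cache_root: Optional[Path] = None) -> bool:
    """Delete the cached copy of a repository; return True if something was removed."""
    target = repo_cache_dir(repo_id, cache_root)
    if target.is_dir():
        shutil.rmtree(target)
        return True
    return False


# Show where this machine would keep the model (nothing is deleted here).
print(repo_cache_dir("cross-encoder/stsb-distilroberta-base"))
```

Calling `remove_repo_cache("cross-encoder/stsb-distilroberta-base")` before loading the model again makes the next run start from an empty cache.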

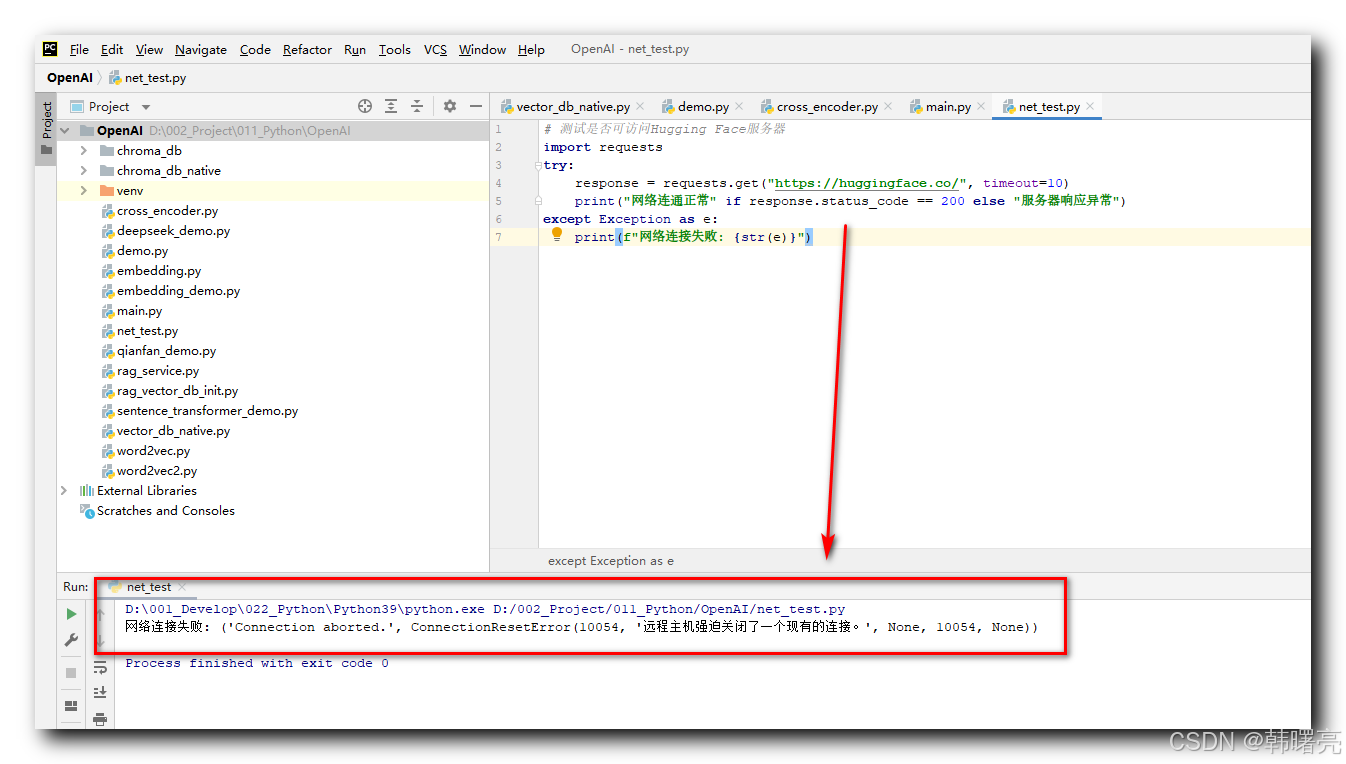

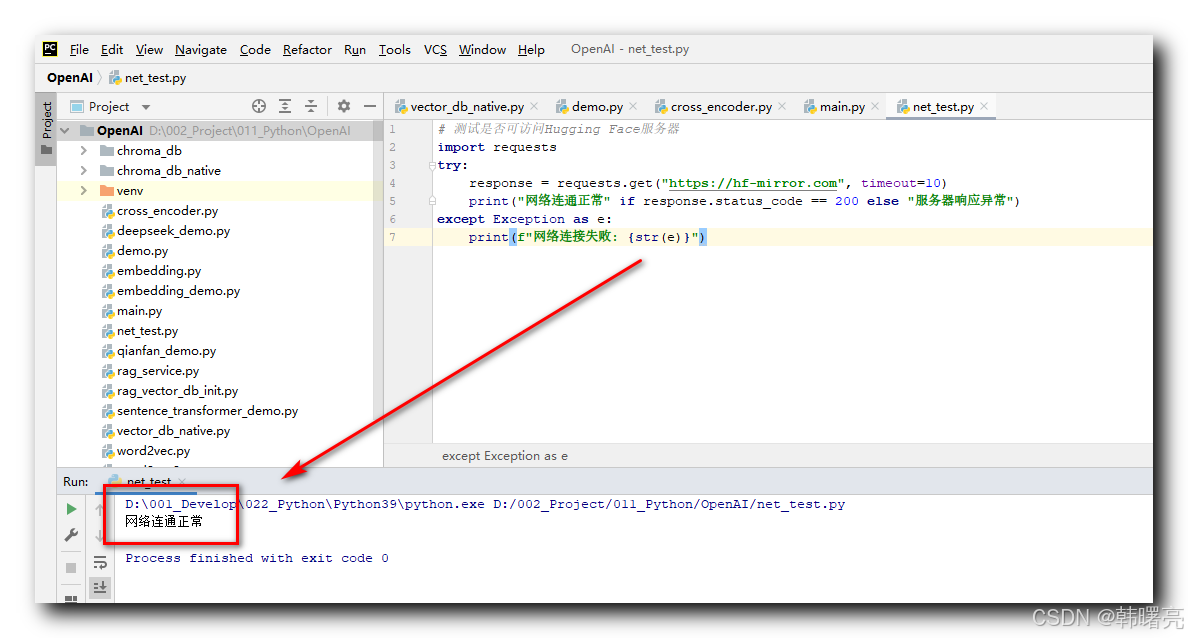

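2. Test the network with Python code

Check network connectivity from code, using the requests.get function of the requests library.

Run the following code in PyCharm:

    # Test whether the Hugging Face server is reachable
    import requests

    try:
        response = requests.get("https://huggingface.co/", timeout=10)
        print("Network connection OK" if response.status_code == 200 else "Unexpected server response")
    except Exception as e:
        print(f"Network connection failed: {str(e)}")

The output is:

    Network connection failed: ('Connection aborted.', ConnectionResetError(10054, '远程主机强迫关闭了一个现有的连接。', None, 10054, None))

The Windows message in the output means "An existing connection was forcibly closed by the remote host."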

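If you run into a network problem, try the following:

- Switch networks: connect to a different Wi-Fi network, or temporarily use a mobile hotspot;

- Proxy settings: check the firewall / proxy settings;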

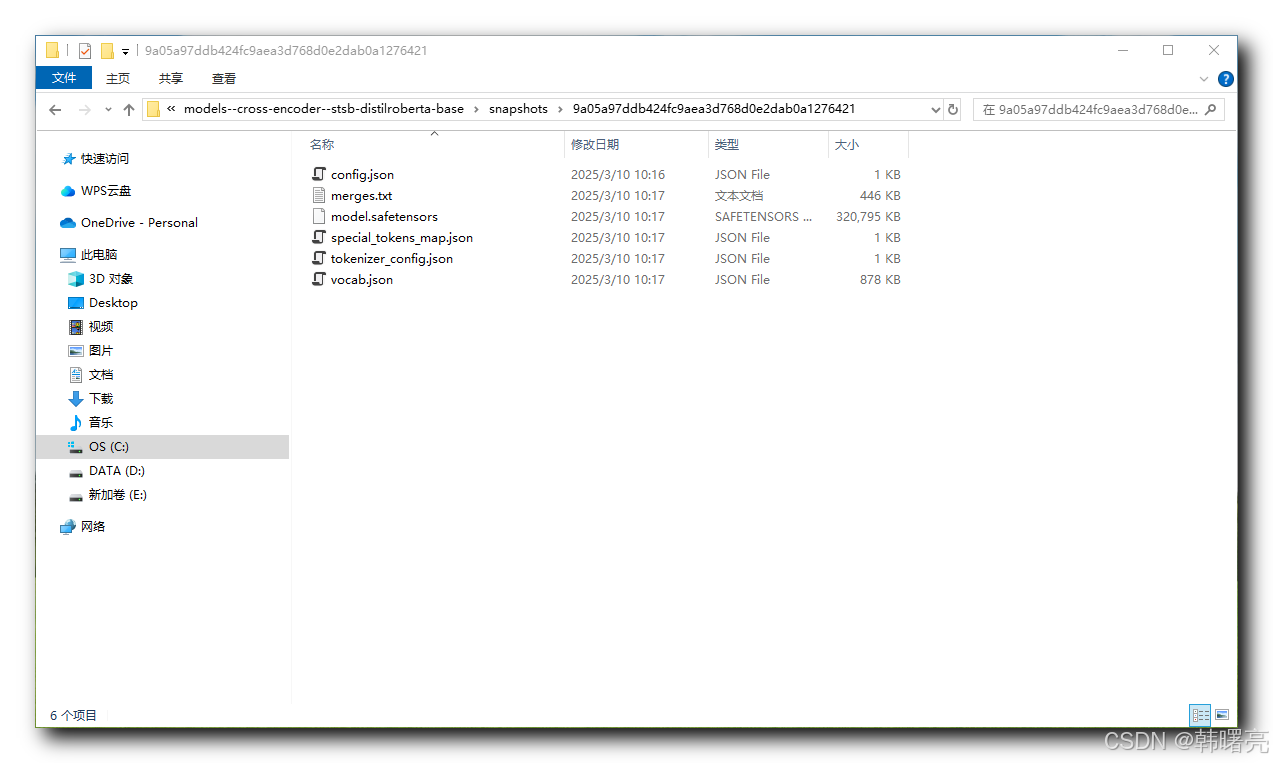

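3. Last resort - download the model manually

Follow the approach described under "III. Solution, 1. Solution 1 - download the model manually" in the article "[Error Log] Local LLM deployment: downloading a model from the Hugging Face Hub fails ( OSError: We couldn't connect to 'https://huggingface.co' )": download the model files by hand from the Hugging Face Hub at https://huggingface.co . For the list of files to download, refer to the directory C:\Users\octop\.cache\huggingface\hub\models--cross-encoder--stsb-distilroberta-base\snapshots\9a05a97ddb424fc9aea3d768d0e2dab0a1276421 , which holds the model files after a successful download.

If you are not sure which model files are needed, download all of them. Any subdirectories have to be created by hand, and the files that belong in them have to be downloaded and moved into the directories you created.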
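After copying files by hand it is easy to miss one. The sketch below checks a local model folder against a list of expected file names. Note that `EXPECTED_FILES` is an illustrative assumption, not the confirmed contents of this model repository, so compare it with the file list shown on the model page. `missing_files` is a hypothetical helper name.

```python
from pathlib import Path
from typing import Iterable, List

# Assumption: an illustrative list, NOT the verified file list of this repository.
EXPECTED_FILES = ("config.json", "tokenizer_config.json")


def missing_files(model_dir: Path, expected: Iterable[str] = EXPECTED_FILES) -> List[str]:
    """Return the expected file names that are not present in model_dir."""
    model_dir = Path(model_dir)
    return [name for name in expected if not (model_dir / name).is_file()]


# Usage (not executed here): load from the local folder once nothing is missing.
#   from sentence_transformers.cross_encoder import CrossEncoder
#   model = CrossEncoder(r"D:\models\stsb-distilroberta-base")  # hypothetical local path
```

Loading from a local folder avoids the network request that triggers the error in the first place.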

III. Solution

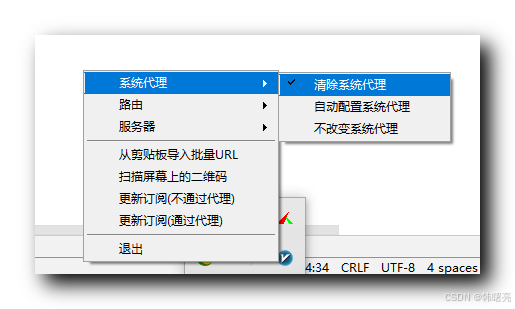

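1. Network problem - clear the proxy

If you run into a network problem, try the following:

- Switch networks: connect to a different Wi-Fi network, or temporarily use a mobile hotspot;

- Proxy settings: check the firewall / proxy settings;

In my case, I had been using a proxy (VPN) tool and shut the computer down without disconnecting it first, which most likely left a stale proxy configuration behind.

The fix was to start the proxy tool again and clear the proxy.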
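Before and after clearing the proxy, it can help to see which proxy settings Python itself would pick up. A minimal sketch using only the standard library: `urllib.request.getproxies()` reports proxies from environment variables, falling back to system settings on Windows and macOS. The helper names and the `PROXY_VARS` list are my own assumptions, and `clear_proxy_env` only affects the current process, not the system-wide proxy that the proxy tool manages.

```python
import os
import urllib.request
from typing import Dict, List

# Environment variables commonly used to configure proxies (assumed list).
PROXY_VARS = ("HTTP_PROXY", "HTTPS_PROXY", "ALL_PROXY", "http_proxy", "https_proxy", "all_proxy")


def effective_proxies() -> Dict[str, str]:
    """Return the proxies the Python standard library would use right now."""
    return urllib.request.getproxies()


def clear_proxy_env() -> List[str]:
    """Remove proxy variables from this process's environment; return the names removed."""
    removed = []
    for name in PROXY_VARS:
        if name in os.environ:
            del os.environ[name]
            removed.append(name)
    return removed


print("Proxies in effect:", effective_proxies())
```

An unexpected entry such as a local address left over from the proxy tool would be consistent with the stale configuration described above.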

Then run the following code again:

    # Test whether the Hugging Face server is reachable
    import requests

    try:
        response = requests.get("https://huggingface.co/", timeout=10)
        print("Network connection OK" if response.status_code == 200 else "Unexpected server response")
    except Exception as e:
        print(f"Network connection failed: {str(e)}")

The result:

    Network connection failed: HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x000001929BB106A0>, 'Connection to huggingface.co timed out. (connect timeout=10)'))

The direct connection to huggingface.co now times out instead of being reset.

Change the URL in the code to the mirror address https://hf-mirror.com and run it again:

    # Test whether the hf-mirror.com mirror is reachable
    import requests

    try:
        response = requests.get("https://hf-mirror.com", timeout=10)
        print("Network connection OK" if response.status_code == 200 else "Unexpected server response")
    except Exception as e:
        print(f"Network connection failed: {str(e)}")

The result:

    Network connection OK
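The two checks above can be folded into one step that tries several endpoints in order and returns the first one that answers. This sketch uses the standard library's `urllib` instead of `requests` so that it has no third-party dependency. `is_reachable` and `first_reachable` are hypothetical helper names, and a server that rejects urllib's default User-Agent would be reported as unreachable.

```python
import http.client
import urllib.request
from typing import Callable, Iterable, Optional


def is_reachable(url: str, timeout: float = 10.0) -> bool:
    """Return True if an HTTP GET to url completes with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Covers timeouts, connection resets, DNS failures and HTTP error statuses.
        return False


def first_reachable(endpoints: Iterable[str],
                    probe: Callable[[str], bool] = is_reachable) -> Optional[str]:
    """Return the first endpoint for which probe() is True, or None if all fail."""
    for url in endpoints:
        if probe(url):
            return url
    return None


# Usage (performs real network requests, so it is left commented out):
#   import os
#   endpoint = first_reachable(["https://huggingface.co", "https://hf-mirror.com"])
#   if endpoint:
#       os.environ["HF_ENDPOINT"] = endpoint  # set before importing sentence_transformers
```

The `probe` parameter exists so the selection logic can be exercised without touching the network.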

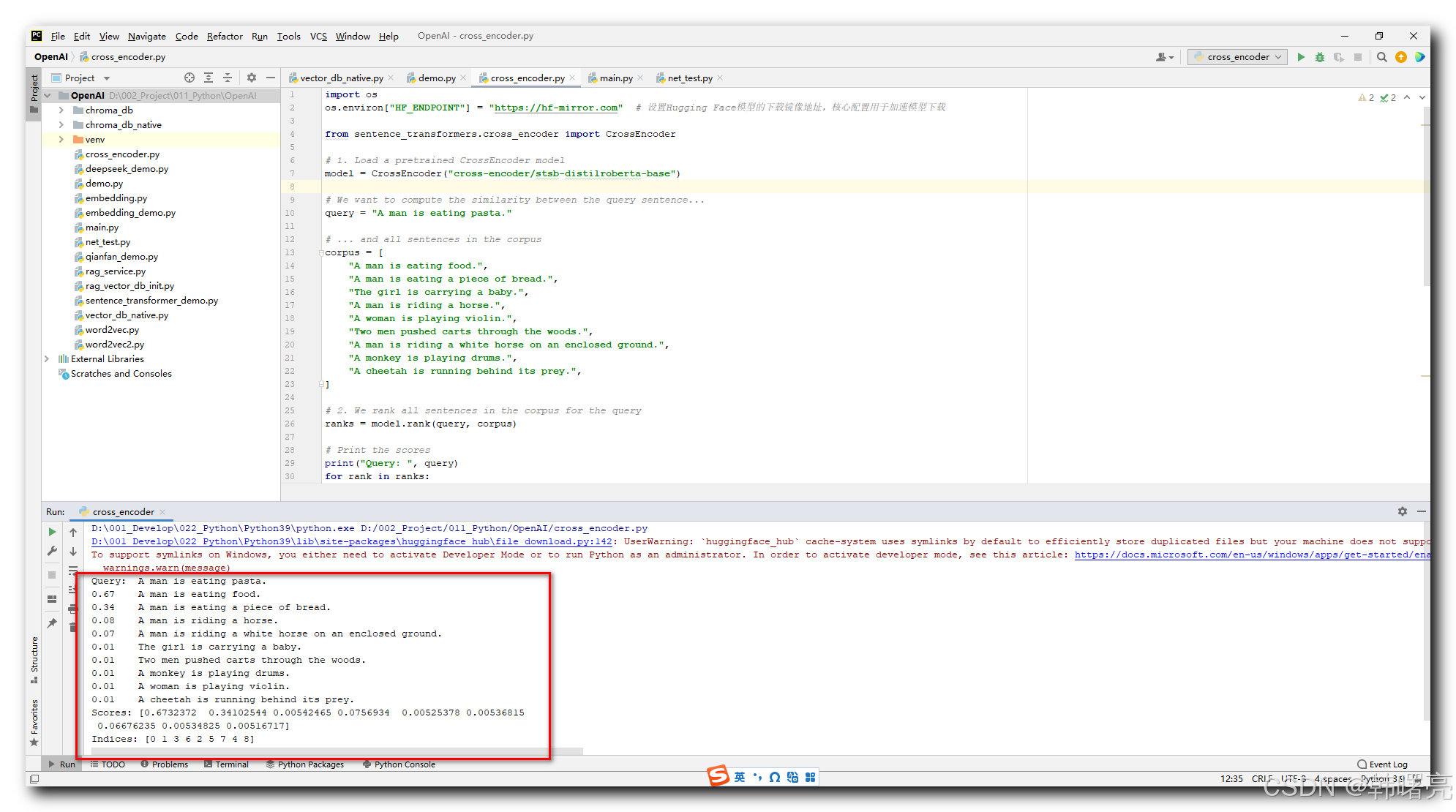

2. Successful run after the fix

Run the code again with the mirror endpoint configured:

    import os

    os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"  # Mirror endpoint for Hugging Face model downloads; the key setting for speeding up downloads

    from sentence_transformers.cross_encoder import CrossEncoder

    # 1. Load a pretrained CrossEncoder model
    model = CrossEncoder("cross-encoder/stsb-distilroberta-base")

    # We want to compute the similarity between the query sentence...
    query = "A man is eating pasta."

    # ... and all sentences in the corpus
    corpus = [
        "A man is eating food.",
        "A man is eating a piece of bread.",
        "The girl is carrying a baby.",
        "A man is riding a horse.",
        "A woman is playing violin.",
        "Two men pushed carts through the woods.",
        "A man is riding a white horse on an enclosed ground.",
        "A monkey is playing drums.",
        "A cheetah is running behind its prey.",
    ]

    # 2. We rank all sentences in the corpus for the query
    ranks = model.rank(query, corpus)

    # Print the scores
    print("Query: ", query)
    for rank in ranks:
        print(f"{rank['score']:.2f}\t{corpus[rank['corpus_id']]}")
    """
    Query: A man is eating pasta.
    0.67 A man is eating food.
    0.34 A man is eating a piece of bread.
    0.08 A man is riding a horse.
    0.07 A man is riding a white horse on an enclosed ground.
    0.01 The girl is carrying a baby.
    0.01 Two men pushed carts through the woods.
    0.01 A monkey is playing drums.
    0.01 A woman is playing violin.
    0.01 A cheetah is running behind its prey.
    """

    # 3. Alternatively, you can also manually compute the score between two sentences
    import numpy as np

    sentence_combinations = [[query, sentence] for sentence in corpus]
    scores = model.predict(sentence_combinations)

    # Sort the scores in decreasing order to get the corpus indices
    ranked_indices = np.argsort(scores)[::-1]
    print("Scores:", scores)
    print("Indices:", ranked_indices)
    """
    Scores: [0.6732372, 0.34102544, 0.00542465, 0.07569341, 0.00525378, 0.00536814, 0.06676237, 0.00534825, 0.00516717]
    Indices: [0 1 3 6 2 5 7 4 8]
    """

The script now runs successfully.

The model directory C:\Users\octop\.cache\huggingface\hub\models--cross-encoder--stsb-distilroberta-base now holds the downloaded model, 314 MB in size.
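To check the size of the download without opening a file manager, a folder's size can be summed up in a few lines. This is a rough sketch: `dir_size_mb` is a hypothetical helper, it reports mebibytes (the 314 MB figure above may have been measured differently), and it skips symbolic links on the assumption that the Hugging Face cache may link snapshot files to a shared blobs folder, which would otherwise be counted twice.

```python
from pathlib import Path


def dir_size_mb(root: Path) -> float:
    """Return the total size of regular files under root, in MiB (symlinks skipped)."""
    total_bytes = sum(
        p.stat().st_size
        for p in Path(root).rglob("*")
        if p.is_file() and not p.is_symlink()
    )
    return total_bytes / (1024 * 1024)


# Usage (the path is the cache folder mentioned above; adjust it for your machine):
#   print(f"{dir_size_mb(Path.home() / '.cache' / 'huggingface' / 'hub'):.0f} MiB")
```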
